3D Reconstruction using Motion Vectors

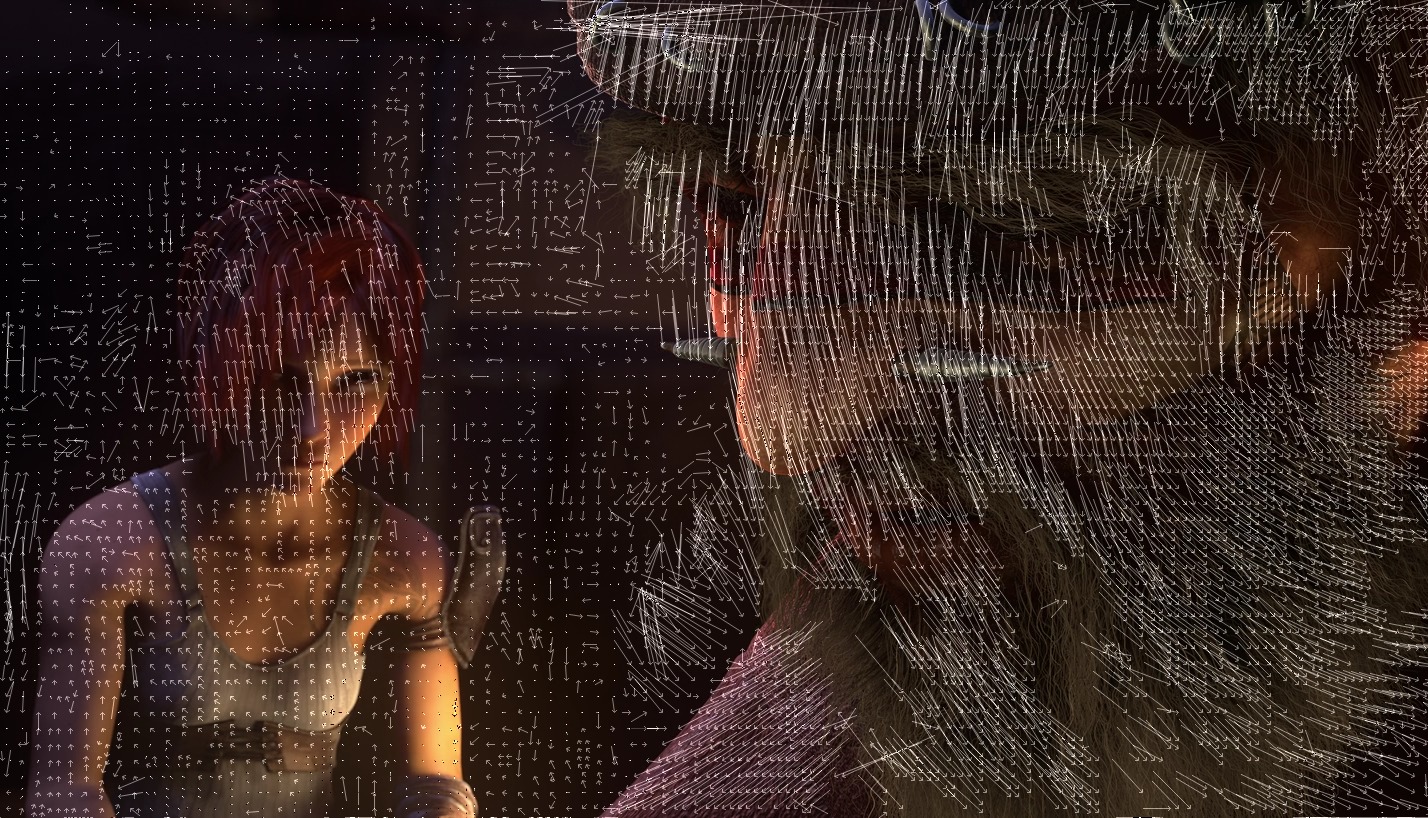

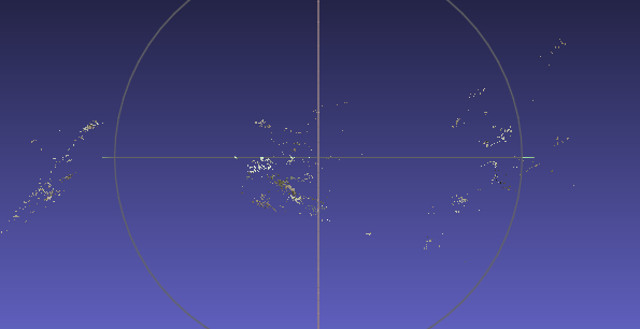

ETH Zurich, Spring 2015

This project was completed as a semester capstone for the 3D Photography course at ETH Zurich.

Video compression algorithms use motion vectors to describe where groups of pixels (macroblocks) move from one frame to the next. When the camera or the scene moves, these motion vectors therefore encode candidate point correspondences between frames. Structure from Motion (SfM) relies on exactly such correspondences for 3D reconstruction: highly salient points are detected in each image, these points are matched to corresponding points in the other images, the camera positions and orientations are estimated from the matches, and a dense reconstruction then fills in the remaining points.

The idea of this project was to skip explicit feature matching between images and take the point correspondences directly from a video's motion vectors. Our team used the ffmpeg library to extract motion vectors from videos of stationary scenes filmed with a moving camera. We then used these motion vectors as point correspondences between frames to build a 3D reconstruction. Unfortunately, the project was unsuccessful: the resulting reconstruction was extremely sparse.
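To make the correspondence step concrete, here is a minimal sketch. It is not the project's original code. It assumes the motion vectors have already been decoded into records carrying a subset of the fields of ffmpeg's `AVMotionVector` struct. ffmpeg attaches these records to decoded frames as `AV_FRAME_DATA_MOTION_VECTORS` side data when the decoder is opened with `flags2=+export_mvs`, as in ffmpeg's `doc/examples/extract_mvs.c`. The `MotionVector` class, the `to_correspondences` function, and the `min_disp` threshold are hypothetical names chosen for illustration. Treating every past-predicted block as a match between the previous frame and the current one is also a simplifying assumption: the struct's `source` field only distinguishes past from future references.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class MotionVector:
    """Hypothetical record mirroring a subset of ffmpeg's AVMotionVector fields."""
    source: int  # < 0: block predicted from a past frame; > 0: from a future frame
    w: int       # block width in pixels
    h: int       # block height in pixels
    src_x: int   # absolute block position in the reference frame
    src_y: int
    dst_x: int   # absolute block position in the current frame
    dst_y: int


def to_correspondences(
    mvs: List[MotionVector],
    width: int,
    height: int,
    min_disp: float = 1.0,
) -> List[Tuple[Point, Point]]:
    """Turn motion vectors into (reference_point, current_point) pairs.

    Keeps only vectors predicted from a past frame, drops vectors whose
    endpoints fall outside the frame (ffmpeg allows such positions), and
    drops vectors shorter than min_disp pixels.
    """
    pairs: List[Tuple[Point, Point]] = []
    for mv in mvs:
        if mv.source >= 0:
            continue  # skip future-referenced (backward-predicted) blocks
        endpoints = ((mv.src_x, mv.src_y), (mv.dst_x, mv.dst_y))
        if not all(0 <= x < width and 0 <= y < height for x, y in endpoints):
            continue  # skip vectors that point outside the image
        dx, dy = mv.dst_x - mv.src_x, mv.dst_y - mv.src_y
        if (dx * dx + dy * dy) ** 0.5 < min_disp:
            continue  # skip (near-)static blocks
        pairs.append(
            ((float(mv.src_x), float(mv.src_y)), (float(mv.dst_x), float(mv.dst_y)))
        )
    return pairs


if __name__ == "__main__":
    example = [
        MotionVector(-1, 16, 16, 8, 8, 12, 8),   # moved 4 px right: kept
        MotionVector(-1, 16, 16, 24, 8, 24, 8),  # zero motion: dropped
        MotionVector(1, 16, 16, 40, 8, 44, 8),   # future reference: dropped
        MotionVector(-1, 16, 16, -4, 8, 2, 8),   # source outside frame: dropped
    ]
    print(to_correspondences(example, width=64, height=32))
    # [((8.0, 8.0), (12.0, 8.0))]
```

Dropping near-zero vectors is a design choice in this sketch, not something the project is known to have done. Such vectors give little parallax for triangulation, but raising `min_disp` also thins out an already sparse set of matches. The surviving pairs could then be passed to a standard SfM pipeline in place of feature matches.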